1. Domain Adaptive Object Detection via Balancing between Self-Training and Adversarial Learning (Accepted @TPAMI2023)

2. Synergizing between Self-Training and Adversarial Learning for Domain Adaptive Object Detection (Accepted @NeurIPS2021)

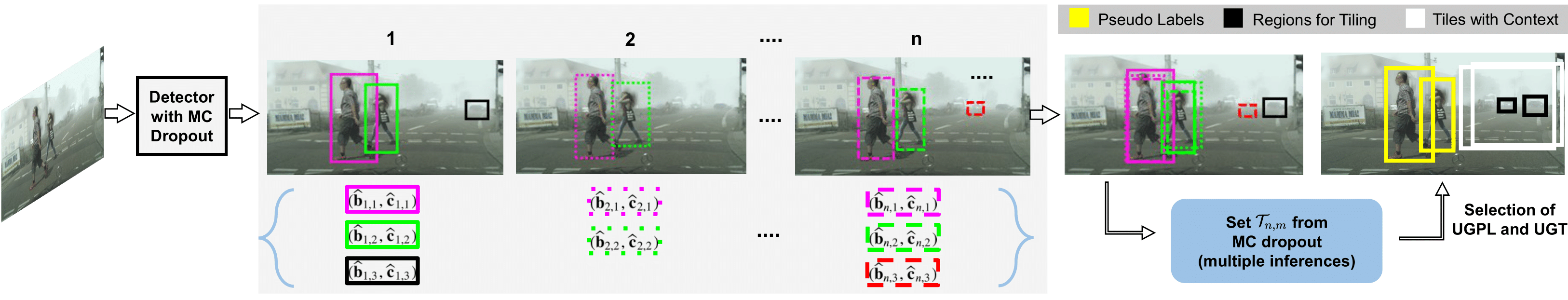

Figure: An illustration of which detections are taken as pseudo-labels and which are used for extracting tiles. More certain detections, such as pedestrians, are taken as pseudo-labels, whereas relatively uncertain ones, such as cars under fog, are used for extracting tiles.

Abstract

Deep learning based object detectors struggle to generalize to a new target domain bearing significant variations in object and background. Most current methods align domains by using image or instance-level adversarial feature alignment. Such alignment often suffers from unwanted background and lacks class-specific alignment. A straightforward approach to promote class-level alignment is to use high-confidence predictions on the unlabeled domain as pseudo-labels. These predictions are often noisy since the model is poorly calibrated under domain shift. In this paper, we propose to leverage the model’s predictive uncertainty to strike the right balance between adversarial feature alignment and class-level alignment. We develop a technique to quantify predictive uncertainty on class assignments and bounding-box predictions. Model predictions with low uncertainty are used to generate pseudo-labels for self-training, whereas the ones with higher uncertainty are used to generate tiles for adversarial feature alignment. This synergy between tiling around uncertain object regions and generating pseudo-labels from highly certain object regions allows capturing both image and instance-level context during the model adaptation. We report a thorough ablation study to reveal the impact of different components in our approach. Results on five diverse and challenging adaptation scenarios show that our approach outperforms existing state-of-the-art methods with noticeable margins.

Contribution

Our key contributions include the following:

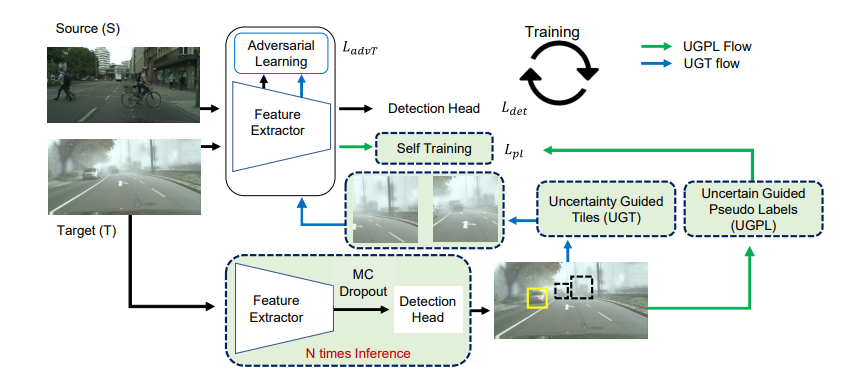

(1) We introduce a new uncertainty-guided framework that strikes the right balance between self-training and adversarial feature alignment for adapting object detection methods. Both pseudo-labelling for self-training and tiling for adversarial alignment are impactful due to their simplicity, generality and ease of implementation.

(2) We propose a method for estimating the object detection uncertainty by taking into account variations in both the localization prediction and the confidence prediction across Monte-Carlo dropout inferences.

(3) We show that, by selecting pseudo-labels with low uncertainty and using relatively uncertain regions for adversarial alignment, it is possible to address the poor calibration caused by domain shift, and hence improve the model’s generalization across domains.

(4) Unlike most of the previous methods, we build on computationally efficient one-stage anchor-less object detectors and achieve state-of-the-art results with notable margins across various adaptation scenarios.

In addition, the current work makes the following new contributions.

(5) We revisit the uncertainty quantification mechanism for object detection to incorporate a new constraint for selecting pseudo-labels.

(6) We extend the tile set by relaxing the uncertainty-guided tiling constraint and including a randomly sampled full image.

(7) We include extensive ablation studies to analyze current work and draw comparisons with its previously published conference version.

(8) Finally, we include experimental results on two new large-scale and challenging adaptation scenarios, encompassing severe domain shifts.

Method (Extended)

Revisiting uncertainty quantification

We note that previous work relies on averaged class confidences as a surrogate measure of the detection model's uncertainty in its class assignment and object localization. This measure doesn’t take into account the spread of the distribution, and so could be misleading for predictions with relatively greater localization uncertainty. To this end, we revisit the uncertainty quantification and use the variance across class confidences alongside the averaged class confidences. Since the variance across class confidences is a much better estimate of the predictive uncertainty, using it in conjunction with the averaged class confidences allows us to further improve the synergy between self-training and adversarial alignment by facilitating more accurate pseudo-labeling and informed tiling.
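To make the point about spread concrete, the following is a minimal sketch, not the released implementation. It assumes that the class confidence of one detection has already been collected from several stochastic (Monte-Carlo dropout) forward passes; the function name `confidence_stats` and the sample values are hypothetical.

```python
from statistics import fmean, pvariance


def confidence_stats(confidences):
    """Return (mean, variance) of one detection's class confidence.

    `confidences` holds the confidence predicted for the same detection in
    each of T stochastic forward passes (dropout kept active at inference).
    """
    return fmean(confidences), pvariance(confidences)


# Two hypothetical detections with the same averaged confidence.
stable = [0.80, 0.81, 0.79, 0.80]    # passes agree with each other
unstable = [0.99, 0.61, 0.95, 0.65]  # passes disagree strongly

mean_s, var_s = confidence_stats(stable)
mean_u, var_u = confidence_stats(unstable)
print(f"stable:   mean={mean_s:.2f} var={var_s:.5f}")
print(f"unstable: mean={mean_u:.2f} var={var_u:.5f}")
```

Both detections have the same averaged confidence, so a mean-only criterion cannot separate them; the variance is what exposes the second one as uncertain.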

Uncertainty-guided pseudo-labeling with a new constraint

Previous work selects pseudo-labels for self-training using averaged class confidences as the uncertainty measure, together with detection consistency. This yields more accurate pseudo-labels than a sole confidence-based criterion, which is crucial for effective adaptation and also improves model calibration under domain shift. To further improve the selection of pseudo-labels, we propose to use both the averaged class confidences and the variance across class confidences as the model’s detection uncertainty, along with the detection consistency.
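The selection rule described above might be sketched as follows. This is an illustration under stated assumptions rather than the paper's exact formulation: the thresholds `min_mean`, `max_var` and `min_iou` are placeholder values, and detection consistency is approximated here as every per-pass box overlapping the averaged box by at least `min_iou`.

```python
from statistics import fmean, pvariance


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_pseudo_label(confs, boxes, min_mean=0.5, max_var=0.01, min_iou=0.5):
    """Decide whether one detection qualifies as a pseudo-label.

    `confs` and `boxes` hold the detection's class confidence and box from
    each stochastic pass. All three thresholds are assumed placeholders.
    """
    # Assumed consistency check: each pass agrees with the averaged box.
    mean_box = tuple(fmean(b[i] for b in boxes) for i in range(4))
    consistent = all(iou(b, mean_box) >= min_iou for b in boxes)
    # High averaged confidence AND low variance AND consistent localization.
    return (fmean(confs) >= min_mean
            and pvariance(confs) <= max_var
            and consistent)
```

A detection whose confidence fluctuates across passes is rejected even when its averaged confidence is high, which is the effect the additional variance constraint is meant to have.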

Uncertainty-guided tiling with extended set

In previous work, detected regions meeting certain criteria are used to extract tiles for adversarial learning. We observe that the regions that fail the uncertainty constraint but satisfy the detection consistency constraint were not utilized for extracting tiles. This limits the space of uncertain detections (possibly containing some object information) that can be exploited for enhanced adversarial alignment. Formally, we choose a region for extracting a tile that fulfills the following criteria. Further, along with the extracted tiles, we also randomly sample the full image in the mini-batch to extend scale information.
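As a rough sketch of how such a tile set could be assembled, the code below crops a window around each uncertain region and, with some probability, adds the full image. The helper names, the `scale` factor and the probability `p_full` are hypothetical and not taken from the paper; the region-selection criteria themselves are not reproduced here.

```python
import random


def tile_window(box, img_w, img_h, scale=2.0):
    """Crop window centred on `box`, `scale` times its size, clamped to the image."""
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    half_w = (box[2] - box[0]) * scale / 2
    half_h = (box[3] - box[1]) * scale / 2
    return (max(0.0, cx - half_w), max(0.0, cy - half_h),
            min(float(img_w), cx + half_w), min(float(img_h), cy + half_h))


def build_tile_set(uncertain_boxes, img_w, img_h, p_full=0.5, rng=random):
    """Tiles around uncertain regions, plus the full image with probability `p_full`."""
    tiles = [tile_window(b, img_w, img_h) for b in uncertain_boxes]
    if rng.random() < p_full:
        # Randomly include the whole image so that full-scale context is also seen.
        tiles.append((0.0, 0.0, float(img_w), float(img_h)))
    return tiles
```

Passing a seeded `random.Random` instance as `rng` makes the sampling reproducible.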

Figure: Overall architecture of our method. Fundamentally, it is a one-stage detector with an adversarial feature alignment stage. We propose uncertainty-guided self-training with pseudo-labels (UGPL) and uncertainty-guided adversarial alignment via tiling (UGT) (in dotted boxes). UGPL produces accurate pseudo-labels in the target image, which are used in tandem with ground-truth labels in the source image for training. UGT extracts tiles around possibly object-like regions in the target image, which are used with randomly extracted tiles around ground-truth labels in the source domain for adversarial feature alignment.

Datasets

The Cityscapes dataset features images of road and street scenes and offers 2975 and 500 examples for training and validation, respectively. It comprises the following categories: {person, rider, car, truck, bus, train, motorbike, and bicycle}.

The Foggy Cityscapes dataset is constructed from Cityscapes by simulating fog at three density levels, using the depth maps provided with Cityscapes.
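Depth-based fog simulation of this kind is commonly described by the standard optical model, in which scene radiance is attenuated with distance and blended with atmospheric light. The per-pixel sketch below illustrates that model only; the function name, the `airlight` default and the attenuation values used in the example are illustrative assumptions, not details taken from the text above.

```python
import math


def add_fog(pixel, depth_m, beta, airlight=1.0):
    """Apply the standard fog model to one pixel intensity in [0, 1].

    t = exp(-beta * depth) is the transmission; the clear-weather intensity
    is attenuated by t and blended with the atmospheric light by (1 - t).
    """
    t = math.exp(-beta * depth_m)
    return pixel * t + airlight * (1.0 - t)


# A dark pixel 100 m away under three illustrative fog densities.
for beta in (0.005, 0.01, 0.02):
    print(beta, round(add_fog(0.2, 100.0, beta), 3))
```

Larger `beta` or larger depth pushes the pixel toward the atmospheric light, which is why distant objects such as cars lose contrast first.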

Sim10k dataset is a collection of synthesized images, comprising 10K images and their corresponding bounding box annotations.

The KITTI dataset resembles Cityscapes in that it features images of road scenes with a wide view of the area, except that KITTI images were captured with a different camera setup.

Following existing works, we consider the car class for experiments when adapting from KITTI or Sim10k.

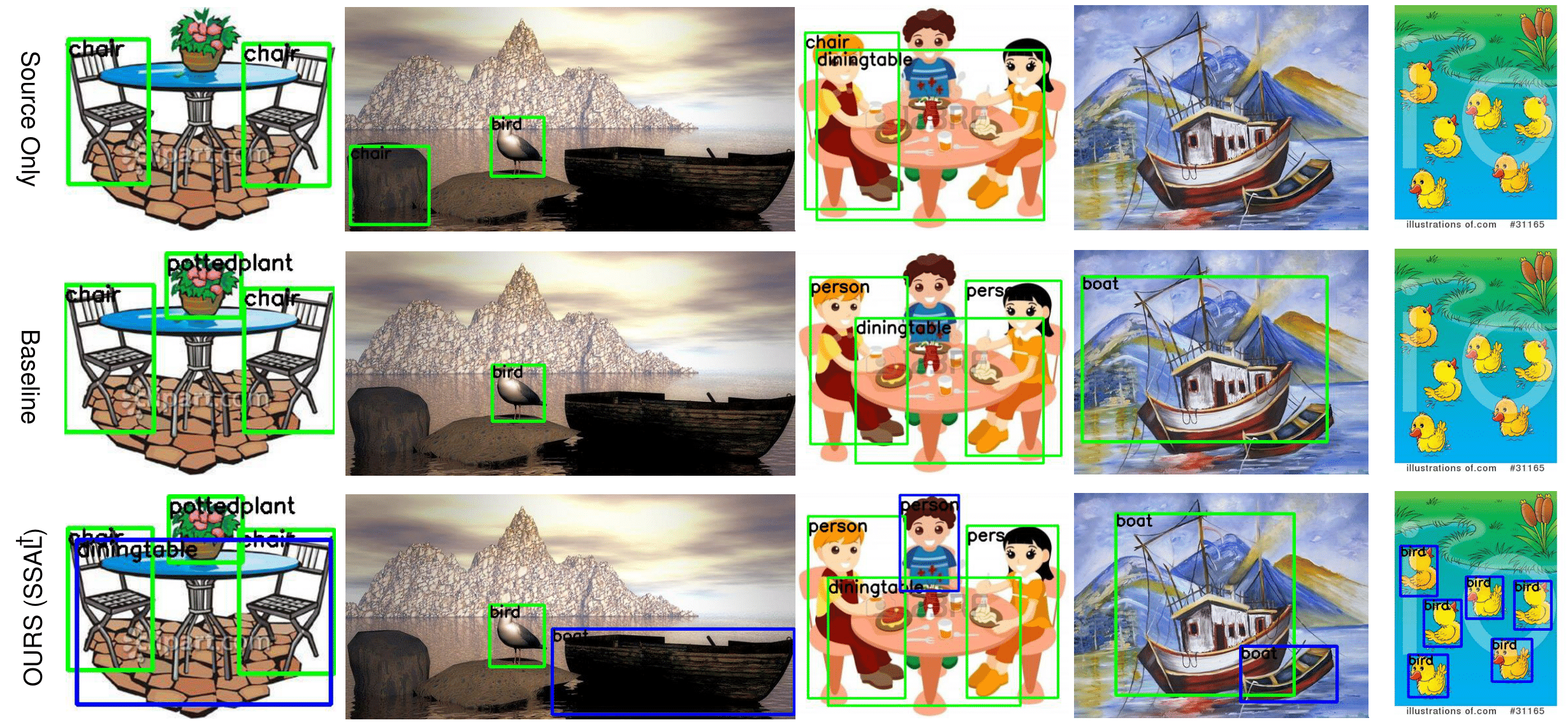

PASCAL VOC is a well-known dataset in the object detection literature, containing 20 categories. This dataset offers real images with bounding box and category level information.

Clipart1k contains 1k artistic images. This dataset has the same 20 categories as PASCAL VOC.

BDD100k is a large-scale dataset containing 100k images with bounding box and class level annotations. Out of these images, 70k are in the training set and 10k are in the validation set. Following the literature, we take a subset of 36.7k images from the training set and 5.2k images from the validation set that were captured in daylight conditions, and use them with the categories shared with Cityscapes.
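A daylight subset of this kind could be built along the following lines. This is a hedged sketch: it assumes each annotation record is a dictionary with a `name`, an `attributes.timeofday` field and a `labels` list whose entries carry a `category`, and the category mapping to Cityscapes names is an assumption for illustration. The actual label files should be checked before relying on these field names.

```python
# Assumed mapping from BDD100k category names to the Cityscapes names.
BDD_TO_CITYSCAPES = {
    "person": "person", "rider": "rider", "car": "car", "truck": "truck",
    "bus": "bus", "train": "train", "motor": "motorbike", "bike": "bicycle",
}


def daytime_subset(records):
    """Keep daytime images and only the labels of categories shared with Cityscapes."""
    subset = []
    for rec in records:
        # Assumed field: attributes.timeofday == "daytime" marks daylight images.
        if rec.get("attributes", {}).get("timeofday") != "daytime":
            continue
        labels = [
            dict(lab, category=BDD_TO_CITYSCAPES[lab["category"]])
            for lab in rec.get("labels", [])
            if lab.get("category") in BDD_TO_CITYSCAPES
        ]
        subset.append({"name": rec["name"], "labels": labels})
    return subset


# Hypothetical records, for illustration only.
sample = [
    {"name": "a.jpg", "attributes": {"timeofday": "daytime"},
     "labels": [{"category": "car"}, {"category": "traffic sign"}, {"category": "bike"}]},
    {"name": "b.jpg", "attributes": {"timeofday": "night"},
     "labels": [{"category": "car"}]},
]
print(daytime_subset(sample))
```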

Qualitative Results

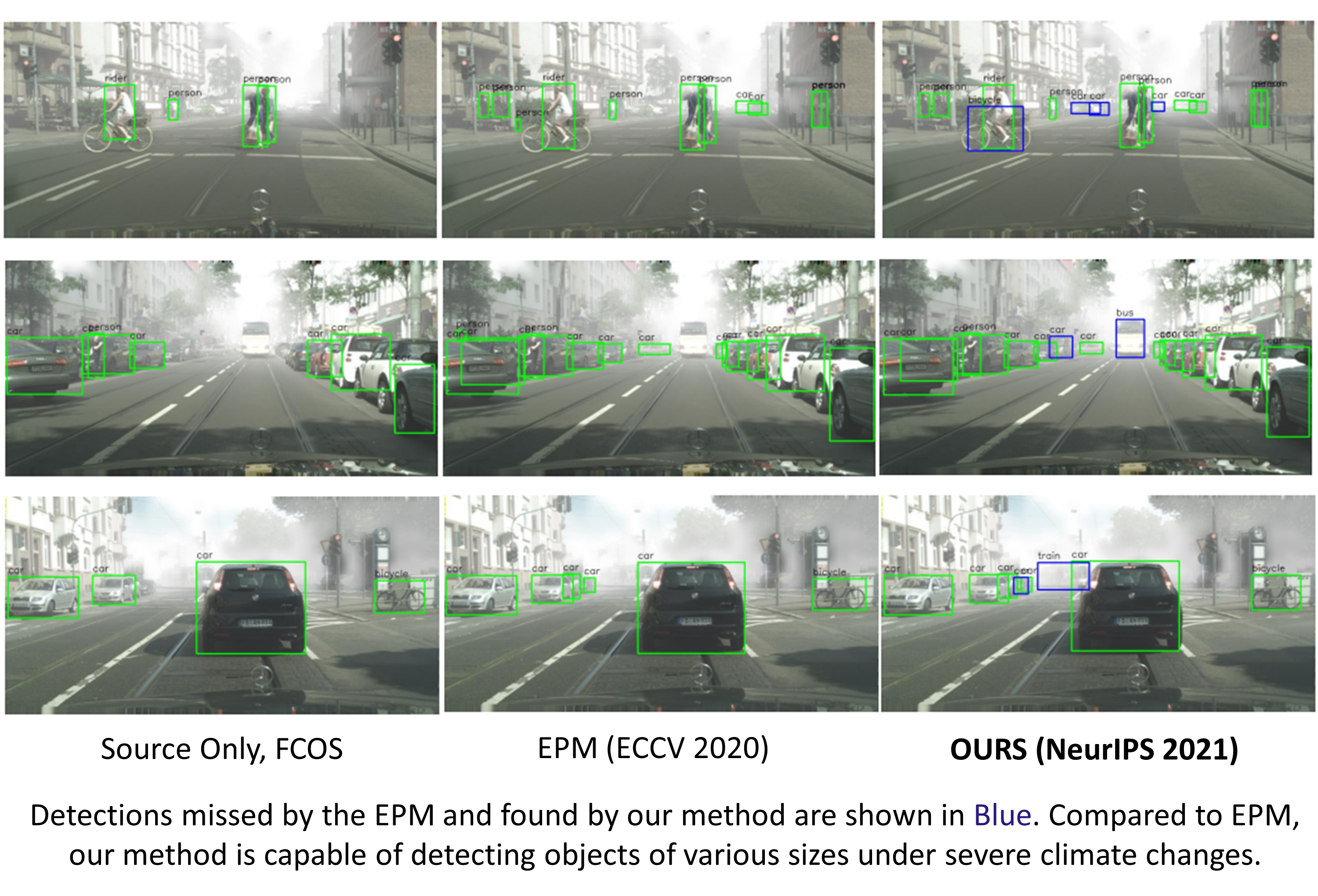

Detections missed by the baseline and found by our method (extended) are shown in blue. Our method detects more objects under large domain shift than EPM.

Publication

Authors’ Information:

Muhammad Akhtar Munir, PhD Student, Intelligent Machines Lab, ITU, Lahore, Pakistan

Email: akhtar.munir@itu.edu.pk

Web: https://scholar.google.com/

Dr. Muhammad Haris Khan, Assistant Professor, MBZUAI, Abu Dhabi, UAE

Email: muhammad.haris@mbzuai.ac.ae

Web: https://mbzuai.ac.ae/pages/muhammad-haris/

Dr. Muhammad Saquib Sarfraz, Senior Scientist & Lecturer, KIT, Karlsruhe, Germany

Email: muhammad.sarfraz@kit.edu

Web: https://ssarfraz.github.io/

Dr. Mohsen Ali, Associate Professor, Intelligent Machines Lab, ITU, Lahore, Pakistan

Email: mohsen.ali@itu.edu.pk

Web: https://im.itu.edu.pk/

Bibtex

author={Munir, Muhammad Akhtar and Khan, Muhammad Haris and Sarfraz, M. Saquib and Ali, Mohsen},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

title={Domain Adaptive Object Detection via Balancing between Self-Training and Adversarial Learning},

year={2023},

volume={45},

number={12},

pages={14353-14365},

doi={10.1109/TPAMI.2023.3290135}}